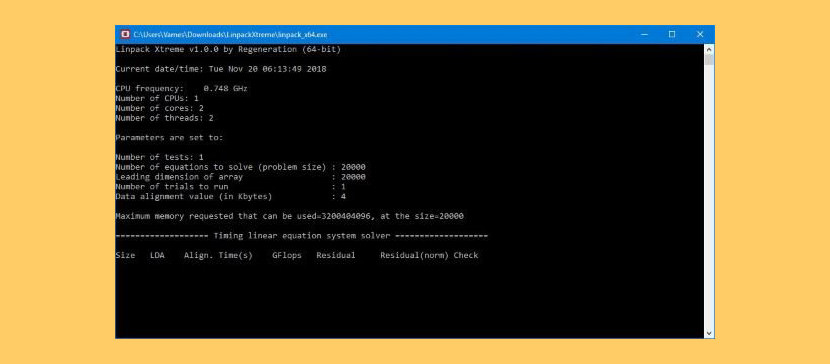

HPL reports its results in FLOPS, or floating-point operations per second. When you run HPL, you get a result showing how long the run took and the rate, in Gflops, at which HPL completed it. With benchmarks like HPL, there is also something called the theoretical peak FLOPS, given by:

Number of cores × Average frequency × Operations per cycle

You will come in below the theoretical peak, but the peak is a good number to compare your HPL results against.

First, we'll look at the number of cores we have:

`cat /proc/cpuinfo`

At the bottom of the cpuinfo output on my laptop, I see processor: 7. Processors are numbered from 0, so there are 8 logical processors. These are hardware threads, though: the i7-6700HQ has 4 physical cores with Hyper-Threading, and the two threads on each core share its floating-point units, so 4 is the core count to use in the formula. From the model name, Intel(R) Core(TM) i7-6700HQ CPU 2.60GHz, the base frequency is 2.60GHz, which we use as the average frequency. For the operations per cycle, we need to dig deeper and look up additional information about the architecture.
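The arithmetic above can be scripted. This is a minimal sketch under stated assumptions: the function names are hypothetical helpers, the core counts come from /proc/cpuinfo on x86 Linux, and the 16 double-precision FLOPs per cycle used for the i7-6700HQ (two 256-bit FMA units under AVX2) is an assumption you should confirm against your CPU's architecture documentation. The `hpl_gflops` helper applies the operation count HPL's test driver uses, 2/3·N³ + 3/2·N², to turn a problem size N and a wall time into Gflops, so a run can be compared with the peak.

```shell
#!/bin/sh
# Sketch: theoretical peak = cores * frequency (GHz) * FLOPs per cycle.
# peak_gflops and hpl_gflops are hypothetical helpers, not part of HPL.

# Theoretical peak in GFLOP/s. Args: cores, GHz, FLOPs per cycle per core.
peak_gflops() {
  awk -v c="$1" -v f="$2" -v o="$3" 'BEGIN { printf "%.1f\n", c * f * o }'
}

# Rate of an HPL run using HPL's operation count 2/3*N^3 + 3/2*N^2.
# Args: problem size N, wall time in seconds.
hpl_gflops() {
  awk -v n="$1" -v t="$2" 'BEGIN { printf "%.3f\n", (2.0 / 3.0 * n * n * n + 1.5 * n * n) / t / 1e9 }'
}

# Logical CPUs: one "processor" entry per hardware thread (numbered from 0).
logical_cpus=$(grep -c '^processor' /proc/cpuinfo 2>/dev/null)
# Physical cores: unique (physical id, core id) pairs; may be 0 on some VMs.
physical_cores=$(grep -E '^(physical id|core id)' /proc/cpuinfo 2>/dev/null | paste - - | sort -u | wc -l)
echo "logical CPUs: ${logical_cpus:-unknown}, physical cores: ${physical_cores}"

# ASSUMPTION: 16 DP FLOPs/cycle (AVX2 with two FMA units) on the i7-6700HQ.
peak_gflops 4 2.60 16
```

With those assumptions the last line prints 166.4 (GFLOP/s). The same helper covers a cluster: `peak_gflops 24 2.33 4` gives 223.7 for 6 nodes × 4 cores at 2.33 GHz, if each Xeon 5140 core retires 4 double-precision FLOPs per cycle (again an assumption to verify).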

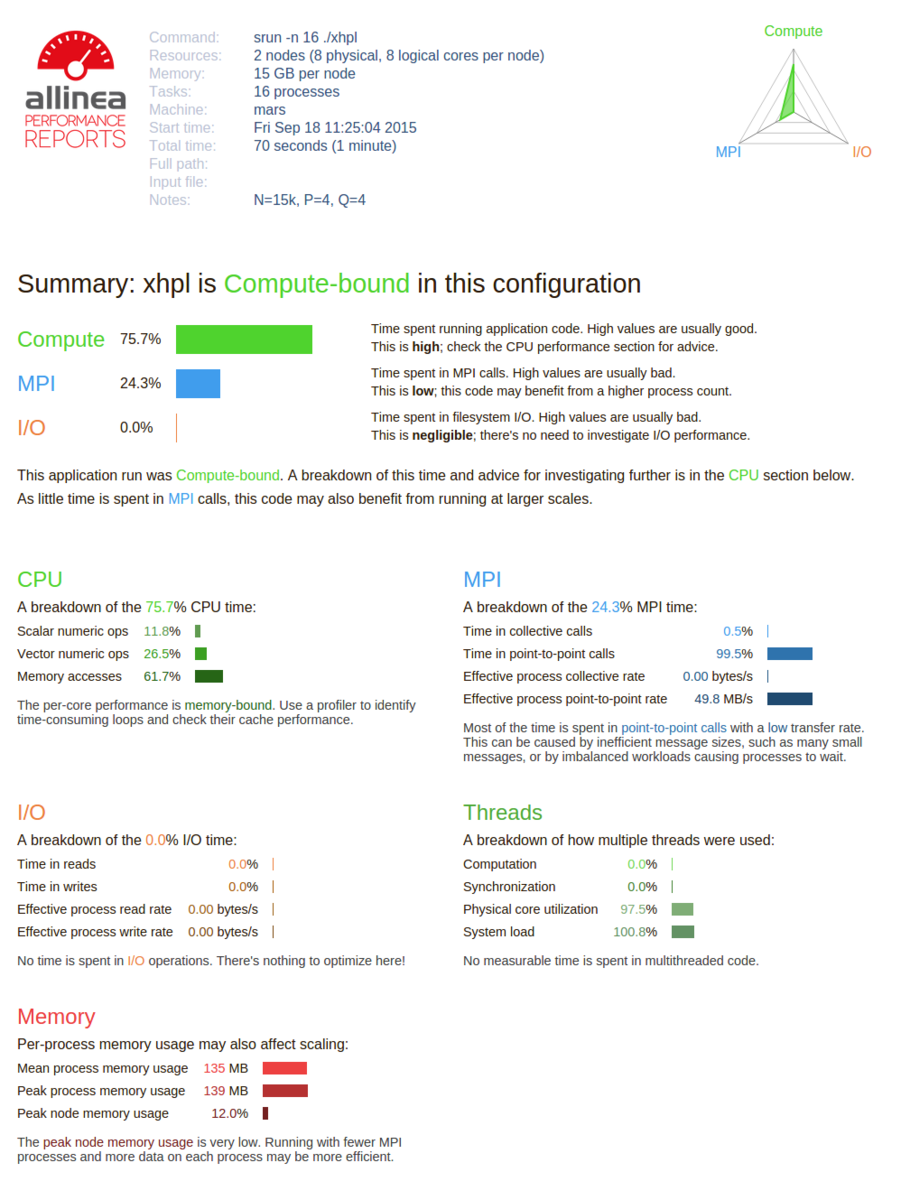

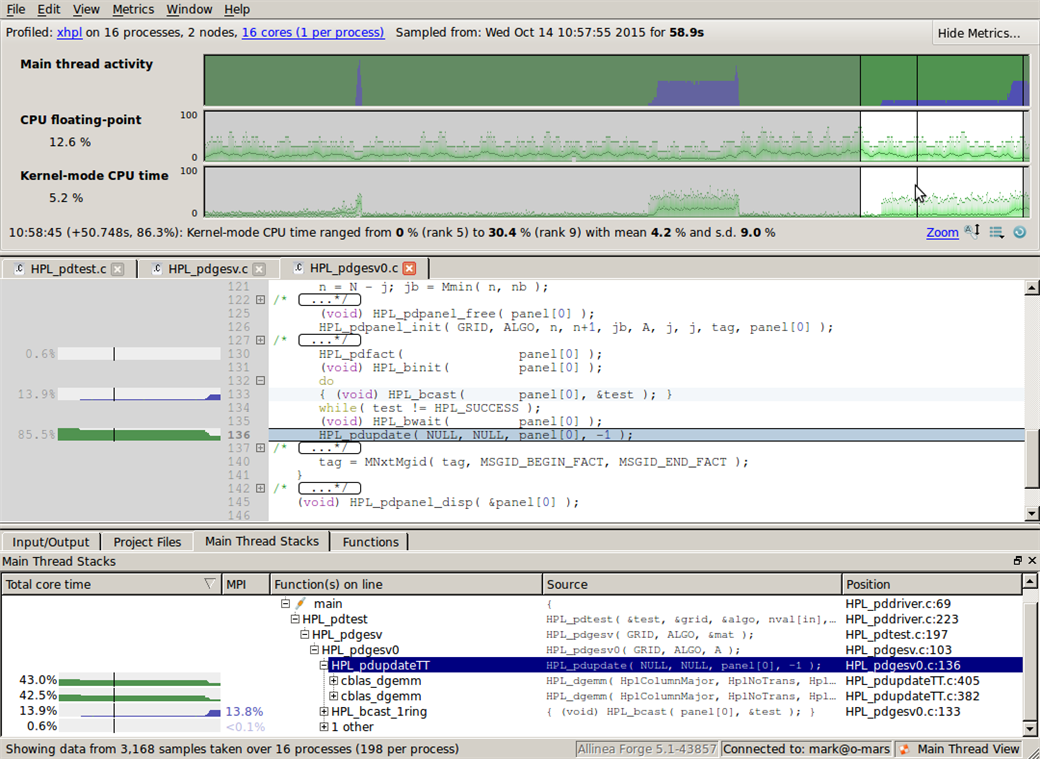

We've been working on a benchmark called HPL, also known as High Performance LINPACK, on our cluster. The specs are: 6 x86 nodes, each with an Intel(R) Xeon(R) CPU 5140 2.33 GHz, 4 cores, and no accelerators. At first, we had difficulty improving HPL performance across nodes: for some reason, we got the same performance with 1 node as with 6 nodes. Before we get into what we did to improve performance across nodes, let's answer the big questions about HPL. HPL measures the floating-point execution rate for solving a system of linear equations. For more information, visit the HPL FAQs.

Note that satlas is the single-threaded version of ATLAS, while tatlas is the multi-threaded version. Compile for that specific architecture:

`make arch=GNU_atlas`

If you make a mistake and get an error when compiling, clean out the old compilation output before trying again:

`make clean_arch_all arch=GNU_atlas`

A successful compile creates the executable `bin/GNU_atlas/xhpl` inside the program directory.

Program Execution - Basics

You should see a series of tests on the screen, with the following summary at the end:

Finished 864 tests with the following results:
864 tests completed and passed residual checks,
0 tests completed and failed residual checks,
0 tests skipped because of illegal input values.

To run a larger LINPACK benchmark that uses more nodes, CPU cores, or memory, you will need to replace the existing HPL.dat file next to the xhpl binary with a new customized file. The wizard at … can help you create one.
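When writing a customized HPL.dat, two values matter most: the problem size N (the Ns line) and the process grid P × Q (the Ps and Qs lines), where P × Q should equal the number of MPI processes you launch. The sketch below follows a common rule of thumb rather than anything HPL prescribes: size the N × N double-precision matrix (8·N² bytes) to about 80% of total memory, round N down to a multiple of the block size NB, and pick the most nearly square grid with P ≤ Q. The helper names, the 80% fraction, and the memory figure in the example are assumptions.

```shell
#!/bin/sh
# Rule-of-thumb helpers for filling in HPL.dat (hypothetical names).

# Largest N (a multiple of NB) whose N x N matrix of 8-byte doubles fits in
# the given fraction of memory. Args: total memory in GiB, NB, fraction.
hpl_problem_size() {
  local mem_gib="$1" nb="$2" fraction="${3:-0.80}"
  awk -v m="$mem_gib" -v nb="$nb" -v f="$fraction" 'BEGIN {
    n = int(sqrt(m * 1024 * 1024 * 1024 * f / 8))  # matrix needs 8*N^2 bytes
    print int(n / nb) * nb                         # round down to a multiple of NB
  }'
}

# Most nearly square P x Q grid with P <= Q and P * Q == ranks.
hpl_grid() {
  local ranks="$1" p=1 best=1
  while [ $((p * p)) -le "$ranks" ]; do
    if [ $((ranks % p)) -eq 0 ]; then best=$p; fi
    p=$((p + 1))
  done
  echo "$best $((ranks / best))"
}
```

For example, 6 nodes × 4 cores gives 24 MPI ranks, and `hpl_grid 24` prints `4 6` (Ps = 4, Qs = 6); `hpl_problem_size 8 192` suggests N = 29184 for a hypothetical 8 GiB of total memory with NB = 192.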

Load the necessary module:

`module load mpi/openmpi-2.0.2`

Create a new directory (inside your home directory) for this program with the `mkdir` command. Don't download/install it on top of your other program!

`mkdir ~/linpack`

Change to the program directory:

`cd hpl-2.1`

Read the installation notes (press 'q' to quit the `less` text viewer when finished):

`less INSTALL`

Copy the Linux/Pentium-II Makefile and rename it to something representative of this build:

`cp setup/Make.Linux_PII_CBLAS Make.GNU_atlas`

Edit Make.GNU_atlas in your favorite text editor:

`nano Make.GNU_atlas`

Make the following changes to the Makefile:

Change 1: ARCH needs to match the name of the Makefile (here, GNU_atlas).

Change 2: TOPdir needs to be set to the folder containing the Makefile.

Change 3: Clear out the MPI variables. We'll be using the MPI compiler wrapper (mpicc) directly, which provides this function.

Change 4: The BLAS variables need to point to the correct locations. Further, libcblas.a is deprecated; use libtatlas.so instead.
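Changes 1-4 can also be applied non-interactively. This is a minimal sketch using GNU sed's in-place editing. It assumes the variable names found in Make.Linux_PII_CBLAS (ARCH, TOPdir, MPdir, MPinc, MPlib, LAdir, LAlib, CC, LINKER), that ATLAS's libtatlas.so lives in a directory such as /usr/lib64/atlas, and that CC and LINKER should both become mpicc. The function name, the library path, and the CC/LINKER change are assumptions; check each against your system and your copy of the Makefile.

```shell
#!/bin/sh
# Hypothetical helper: apply Changes 1-4 to an HPL Make.<arch> file in place.
# Requires GNU sed (-i without a suffix). Paths must not contain '|' or '&'.
# Args: makefile path, TOPdir (absolute path), ATLAS library directory.
apply_hpl_make_changes() {
  local mk="$1" topdir="$2" atlas_dir="$3"
  sed -i \
    -e "s|^ARCH[[:space:]]*=.*|ARCH         = GNU_atlas|" \
    -e "s|^TOPdir[[:space:]]*=.*|TOPdir       = ${topdir}|" \
    -e "s|^MPdir[[:space:]]*=.*|MPdir        =|" \
    -e "s|^MPinc[[:space:]]*=.*|MPinc        =|" \
    -e "s|^MPlib[[:space:]]*=.*|MPlib        =|" \
    -e "s|^LAdir[[:space:]]*=.*|LAdir        = ${atlas_dir}|" \
    -e "s|^LAlib[[:space:]]*=.*|LAlib        = \$(LAdir)/libtatlas.so|" \
    -e "s|^CC[[:space:]]*=.*|CC           = mpicc|" \
    -e "s|^LINKER[[:space:]]*=.*|LINKER       = mpicc|" \
    "$mk"
}
```

Run it from inside hpl-2.1, for example `apply_hpl_make_changes Make.GNU_atlas "$PWD" /usr/lib64/atlas`, and review the result with `grep -E '^(ARCH|TOPdir|MP|LA|CC|LINKER)' Make.GNU_atlas`. The `[[:space:]]*=` anchors keep similarly named variables such as ARCHIVER and CCFLAGS untouched.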
